PowerPoint Presentation

Scene 1 (0s)

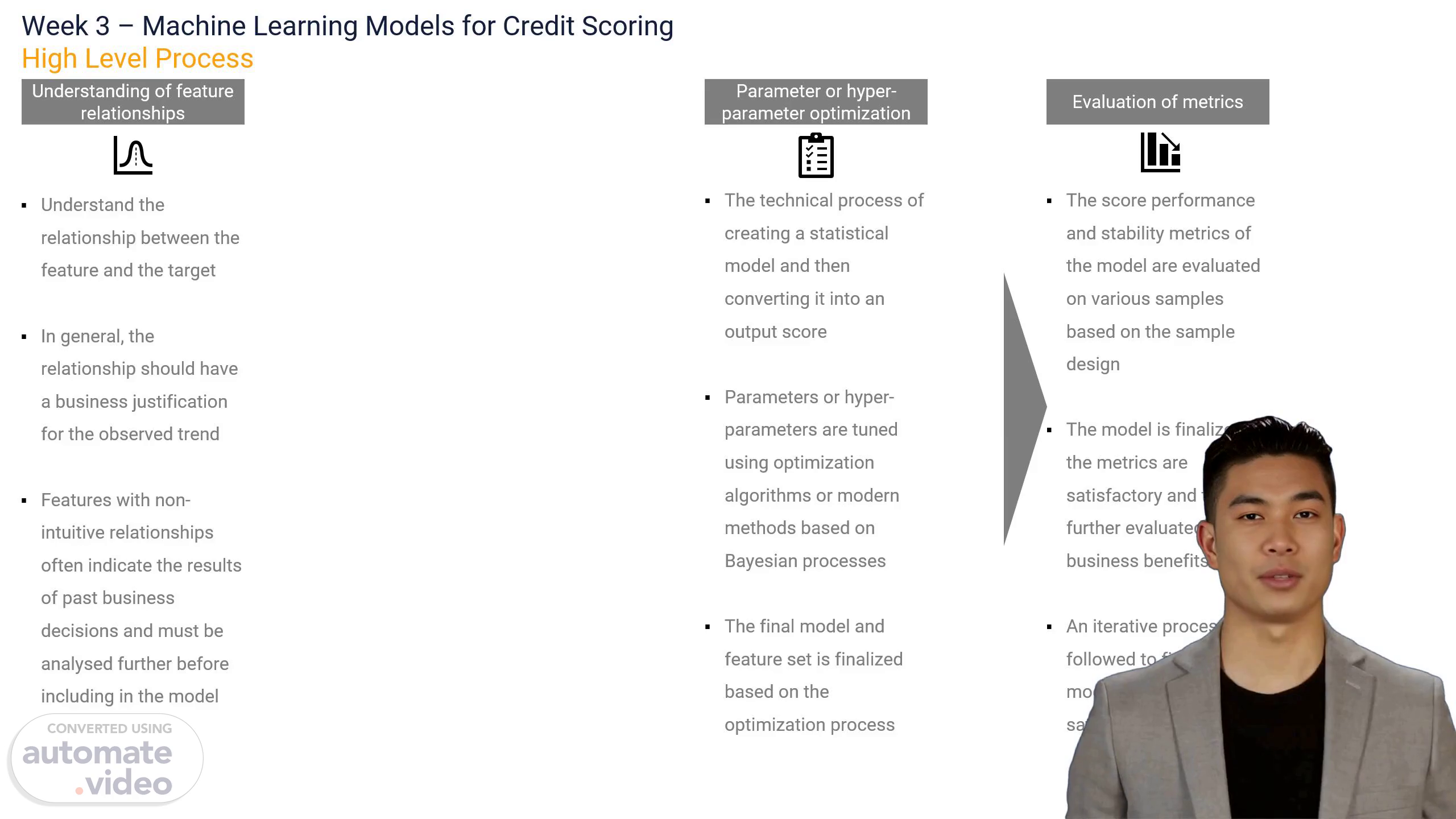

[Virtual Presenter] We understand that creating machine learning models for credit scoring requires a high-level approach. Our process involves several steps: understanding the relationship between features and the target, optimizing model parameters, evaluating the model, and fine-tuning to ensure score quality and stability. We begin by dividing our development data into samples to prevent over- or under-fitting of the model. We use the training sample to tune parameters and select the final features, the testing sample to evaluate the stability of parameters and evaluation metrics, and the out-of-time (OOT) sample to test the stability of evaluation metrics in a different period. The technical process of creating a statistical model and converting it into an output score involves tuning parameters or hyperparameters, either with optimization algorithms or with modern methods based on Bayesian optimization. We then finalize the model and feature set based on the optimization process and evaluate score performance and stability metrics on each sample defined in the sample design. Finally, we iterate, fine-tuning the model until the metrics are satisfactory, and evaluate the model for business benefits. Thank you for joining us for this tutorial. If you have any questions, please feel free to reach out to us.
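The sample design described here can be sketched in Python. This is a minimal illustration under stated assumptions, not the presenters' own method: the record field `app_date`, the helper name `split_samples`, the 30% test fraction, and the OOT cut-off date are all hypothetical. Records dated on or after the cut-off form the out-of-time sample; the earlier development-period records are randomly split into training and testing samples.

```python
from datetime import date
from random import Random


def split_samples(records, oot_start, test_fraction=0.3, seed=42):
    """Split records into training, testing and out-of-time (OOT) samples.

    Hypothetical helper: each record is assumed to be a dict with an
    "app_date" key holding the application date.
    """
    # Records from the later period are held out entirely as the OOT sample.
    development = [r for r in records if r["app_date"] < oot_start]
    oot = [r for r in records if r["app_date"] >= oot_start]

    # The development period is shuffled reproducibly and split at random.
    shuffled = list(development)
    Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test], oot


# Example: twelve monthly records; October 2022 onward is held out as OOT.
records = [{"id": m, "app_date": date(2022, m, 1)} for m in range(1, 13)]
train, test, oot = split_samples(records, oot_start=date(2022, 10, 1))
```

Holding out a later period, rather than sampling it at random, is what lets the OOT sample reveal whether the evaluation metrics stay stable over time.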

Scene 2 (1m 25s)

[Audio] We create machine learning models for credit scoring by identifying relevant data sources, designing algorithms, training models, and evaluating performance.

Scene 3 (1m 35s)

[Audio] Creating an effective credit scoring model involves several steps, beginning with understanding the relationship between the features and the target variable. In this step, we select the right features and determine their importance in predicting creditworthiness. We then optimize the model by selecting the best algorithms, adjusting hyperparameters, and tuning it to improve its performance. After optimizing the model, we evaluate its performance using standard metrics such as AUC and Gini. These metrics measure how well the model separates good records from bad ones, and therefore how well it predicts creditworthiness. Finally, we fine-tune the model to ensure that it produces stable and accurate scores. In this slide, we will discuss the cumulative percentages of good and bad records and how we can use them to evaluate the strength of our credit scoring model. We will also explore why standard metrics such as AUC and Gini are important in determining the strength of our model.
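A minimal Python sketch of these metrics, assuming a higher score means lower risk and that `is_bad` is 1 for a bad record and 0 for a good one; both function names are hypothetical. AUC is computed here as the probability that a randomly chosen good record outscores a randomly chosen bad record (ties count as half), and Gini follows from the standard relation Gini = 2 × AUC − 1. The second function returns the cumulative shares of bad and good records at or below each score. The pairwise loop is quadratic, which is fine for illustration but not for large samples.

```python
def auc_gini(scores, is_bad):
    """Return (AUC, Gini) for a score where higher values mean lower risk."""
    goods = [s for s, b in zip(scores, is_bad) if not b]
    bads = [s for s, b in zip(scores, is_bad) if b]
    wins = 0.0
    for g in goods:
        for b in bads:
            if g > b:
                wins += 1.0  # good record correctly ranked above the bad one
            elif g == b:
                wins += 0.5  # ties count as half a correct ranking
    auc = wins / (len(goods) * len(bads))
    return auc, 2 * auc - 1


def cumulative_good_bad(scores, is_bad):
    """List of (score, cumulative share of bads, cumulative share of goods) at or below each score."""
    total_bad = sum(is_bad)
    total_good = len(is_bad) - total_bad
    rows = []
    for cutoff in sorted(set(scores)):
        bad_below = sum(1 for s, b in zip(scores, is_bad) if s <= cutoff and b)
        good_below = sum(1 for s, b in zip(scores, is_bad) if s <= cutoff and not b)
        rows.append((cutoff, bad_below / total_bad, good_below / total_good))
    return rows


# Toy example: six scored records, three of them bad.
scores = [300, 400, 500, 600, 700, 800]
is_bad = [1, 1, 0, 1, 0, 0]
auc, gini = auc_gini(scores, is_bad)  # AUC = 8/9, Gini = 7/9
curve = cumulative_good_bad(scores, is_bad)
```

A strong score accumulates bads much faster than goods at the low end of the range, which is exactly what a high AUC and Gini summarize.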

Scene 4 (2m 39s)

[Audio] Creating machine learning models for credit scoring is a complex process that involves understanding the relationship between features and the target, optimizing model parameters, evaluating the model, fine-tuning, and rank ordering. Rank ordering is an essential aspect of this process. It is the property of a score whereby the bad rate by score follows a monotonic trend. Rank ordering is often used to determine a cut-off for the score; in other words, all records above or below a certain threshold are subject to a particular credit risk decision based on the bad rate expected above or below that threshold. Bureau or alternative data scores, which are generic in nature, often rank order the records of different portfolios, even though the bad rate at each score depends on the overall bad rate of the portfolio to which it is applied. In this example, a score is shown that differentiates high- and low-risk records for two different portfolios, one with an average bad rate of ~49% (blue line) and the other with an average bad rate of ~4% (green line); however, the same score value has very different bad rates depending on the portfolio.
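Rank ordering can be checked by computing the bad rate in each score band and confirming that it falls as the score rises. The Python sketch below assumes higher scores mean lower risk; the band edges, the target bad rate, and all helper names are hypothetical. Once the score rank orders, a cut-off can be taken as the lower edge of the first band whose bad rate meets the target, since every higher band then has an equal or lower bad rate.

```python
def bad_rate_by_band(scores, is_bad, band_edges):
    """Bad rate per band, where band i covers [band_edges[i], band_edges[i + 1])."""
    rates = []
    for lo, hi in zip(band_edges[:-1], band_edges[1:]):
        outcomes = [b for s, b in zip(scores, is_bad) if lo <= s < hi]
        rates.append(sum(outcomes) / len(outcomes) if outcomes else None)
    return rates


def rank_orders(rates):
    """True if the bad rate never increases from one band to the next (empty bands skipped)."""
    observed = [r for r in rates if r is not None]
    return all(a >= b for a, b in zip(observed, observed[1:]))


def cutoff_for_target(rates, band_edges, max_bad_rate):
    """Lower edge of the first band whose bad rate is at or below the target.

    Only meaningful when rank_orders(rates) is True.
    """
    for lo, rate in zip(band_edges, rates):
        if rate is not None and rate <= max_bad_rate:
            return lo
    return None


# Toy example with three hypothetical 100-point bands.
scores = [310, 320, 330, 410, 420, 430, 510, 520, 530, 540]
is_bad = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
edges = [300, 400, 500, 600]
rates = bad_rate_by_band(scores, is_bad, edges)
```

Applying the same bands to a second portfolio with a different average bad rate would show the effect described above: the score may still rank order, but each band's bad rate shifts with the portfolio.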

Scene 5 (3m 57s)

[Audio] Stability is a crucial element of credit scoring models: a stable score distribution ensures that the scores are reliable. The Population Stability Index (PSI) is used to evaluate how consistent the score distribution in an out-of-time (OOT) sample is compared with the development period. We visualize score stability using charts. The chart on the left shows a relatively stable score distribution in the OOT period, with a slight shift towards higher score ranges. The chart on the right, however, shows a significantly altered score distribution in the OOT period, with most records shifted towards lower score ranges. To understand which score features are responsible for these changes, we conduct a feature-level analysis, which identifies the features that contribute to score stability or instability. Stability must therefore be considered carefully during development to guarantee a consistent and reliable score distribution.
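PSI compares the share of records in each score band between the development sample (expected, E) and the OOT sample (actual, A): PSI = Σ (A_i − E_i) × ln(A_i / E_i). The Python sketch below is illustrative only; the function name, the band counts, and the small floor `eps` used to avoid taking the log of zero for an empty band are hypothetical choices. Thresholds of roughly 0.1 and 0.25 are often quoted as rules of thumb for a minor and a significant shift, but they are conventions rather than fixed standards.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two distributions binned into the same bands."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    value = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor each share at eps so an empty band does not produce log(0).
        e_share = max(e / e_total, eps)
        a_share = max(a / a_total, eps)
        value += (a_share - e_share) * math.log(a_share / e_share)
    return value


dev_counts = [100, 200, 300, 400]   # development sample, low to high score bands
stable_oot = [100, 200, 300, 400]   # same shape in the OOT period
shifted_oot = [400, 300, 200, 100]  # OOT records moved towards lower scores
```

With these toy counts, `psi(dev_counts, stable_oot)` is 0 and `psi(dev_counts, shifted_oot)` is about 0.91, mirroring the contrast between the two charts. The same calculation can be repeated per feature for the feature-level analysis.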

Scene 6 (5m 1s)

[Audio] Creating machine learning models for credit scoring is a complex process that requires a high level of expertise. It involves understanding the relationship between features and the target, optimizing model parameters, evaluating the model, and fine-tuning to ensure score quality and stability. When these models are applied, several types of decisions can be made, including approving or rejecting a credit application based on the model's predictions. Swap-in and swap-out decisions are two types of decisions that arise when implementing a new credit scoring process. A swap-in occurs when an application rejected by the existing process is approved by the new process; this is done when the application's expected bad rate under the new process is within or close to the existing portfolio's bad rate. A swap-out occurs when an application approved by the existing process is rejected by the new process because the new process assigns it a very high bad rate. These decisions can have a significant impact on the overall performance of the new credit scoring process, so it's important to consider them and their potential consequences carefully when implementing it.
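A swap-set analysis can be sketched by comparing the existing and new decisions for the same applications. This Python example is hypothetical: decisions are assumed to be booleans (True = approved), and outcomes are assumed to be known (1 = bad, 0 = good) only for applications the existing process approved, with None for the rest. That is why the bad rate of swap-outs can be measured directly, while swap-ins have no observed performance under the existing process and their bad rate has to be estimated.

```python
from collections import Counter


def swap_labels(old_approved, new_approved):
    """Label each application by comparing existing and new decisions."""
    labels = []
    for old, new in zip(old_approved, new_approved):
        if old and new:
            labels.append("approved by both")
        elif new:
            labels.append("swap-in")   # rejected before, approved now
        elif old:
            labels.append("swap-out")  # approved before, rejected now
        else:
            labels.append("rejected by both")
    return labels


def observed_bad_rate(labels, is_bad, group):
    """Bad rate within one group, using only records with a known outcome."""
    outcomes = [b for lab, b in zip(labels, is_bad) if lab == group and b is not None]
    return sum(outcomes) / len(outcomes) if outcomes else None


# Toy example: five applications scored by both processes.
old = [True, True, False, False, True]
new = [True, False, True, False, True]
is_bad = [0, 1, None, None, 0]
labels = swap_labels(old, new)
counts = Counter(labels)
```

Comparing the swap-out bad rate with the bad rate of applications approved by both processes indicates whether the new process is removing genuinely riskier business.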

Scene 7 (6m 12s)

[Audio] How do we create machine learning models for credit scoring? Our process involves understanding the relationship between features and the target, optimizing model parameters, evaluating the model, and fine-tuning to ensure score quality and stability. Let's take an example to understand the process. Suppose we have a dataset of customers who applied for a loan 6 months ago, and we want to predict whether they will default on their loan based on features such as their credit score, income, and employment status. First, we split the dataset into training and testing sets so that we can optimize our model parameters. We also define evaluation metrics, such as accuracy, precision, and recall, to measure the performance of our model. Next, we train our model on the training set and evaluate its performance on the testing set. Based on the evaluation results, we may need to fine-tune our model parameters to improve performance. Once we are satisfied with the model's performance, we can use it to predict whether new customers will default on their loans. For example, suppose a new customer has a credit score of 700, an income of $50,000 per month, and an employment status of self-employed. Based on our model, we predict that this customer has a 2.5% chance of defaulting on their loan within the next 6 months.
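The evaluation metrics named in this example can be computed from the model's predicted default probabilities once a decision threshold is chosen. In this Python sketch the 0.5 threshold, the toy labels and probabilities, and the function name are hypothetical; in credit scoring the threshold is usually a business choice, and when defaults are rare, accuracy alone can look high even for a weak model.

```python
def classification_metrics(defaulted, default_prob, threshold=0.5):
    """Accuracy, precision and recall for default predictions at a probability threshold."""
    predicted = [p >= threshold for p in default_prob]
    pairs = list(zip(defaulted, predicted))
    tp = sum(1 for t, p in pairs if t and p)          # predicted default, did default
    fp = sum(1 for t, p in pairs if not t and p)      # predicted default, repaid
    fn = sum(1 for t, p in pairs if t and not p)      # missed default
    tn = sum(1 for t, p in pairs if not t and not p)  # correctly predicted repayment
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Toy test set: 1 = defaulted within 6 months, 0 = repaid.
defaulted = [1, 0, 1, 0, 0, 1]
default_prob = [0.8, 0.3, 0.4, 0.6, 0.1, 0.9]
metrics = classification_metrics(defaulted, default_prob)
```

Here precision answers "of the applicants flagged as likely to default, how many did?", while recall answers "of the applicants who defaulted, how many were flagged?".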

Scene 8 (7m 37s)

[Audio] We have presented a comprehensive guide to creating machine learning models for credit scoring. To ensure regulatory compliance, machine learning models must be transparent and explainable. This allows financial institutions to identify potential sources of risk and manage them proactively. Model transparency is also crucial for building trust among stakeholders, including regulators, investors, and borrowers. When consumers are given insight into how their creditworthiness is assessed, they can make informed decisions about managing their finances. To validate and govern machine learning models, stakeholders must assess model performance, detect biases, and ensure model reliability and fairness. We discussed several techniques for interpreting machine learning models, including basic “common sense” techniques such as feature importance and impact, and modern analytical techniques such as global explanations, local feature-based methods, and instance-based methods. Thank you for listening.
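As one illustration of a model-agnostic global explanation, permutation feature importance shuffles one feature at a time and measures how much a performance metric drops. It is used here only as an example of this family of techniques; the function names, the toy model, and the data are hypothetical. A feature the model ignores receives an importance of zero.

```python
from random import Random


def accuracy(y_true, scores, threshold=0.5):
    """Share of records whose thresholded score matches the label."""
    return sum((s >= threshold) == bool(t) for t, s in zip(y_true, scores)) / len(y_true)


def permutation_importance(predict, X, y, metric, n_repeats=5, seed=0):
    """Average drop in `metric` when each feature column is shuffled independently."""
    rng = Random(seed)
    baseline = metric(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - metric(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


# Toy model that only uses feature 0, so feature 1 should get zero importance.
X = [[0.9, 0.1], [0.2, 0.7], [0.8, 0.4], [0.1, 0.9], [0.7, 0.2], [0.3, 0.6]]
y = [1, 0, 1, 0, 1, 0]
importances = permutation_importance(lambda row: row[0], X, y, accuracy)
```

Because the method only needs a prediction function and a metric, the same sketch applies to any model, which is what makes it useful for validation and governance.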