Scene 1 (0s)

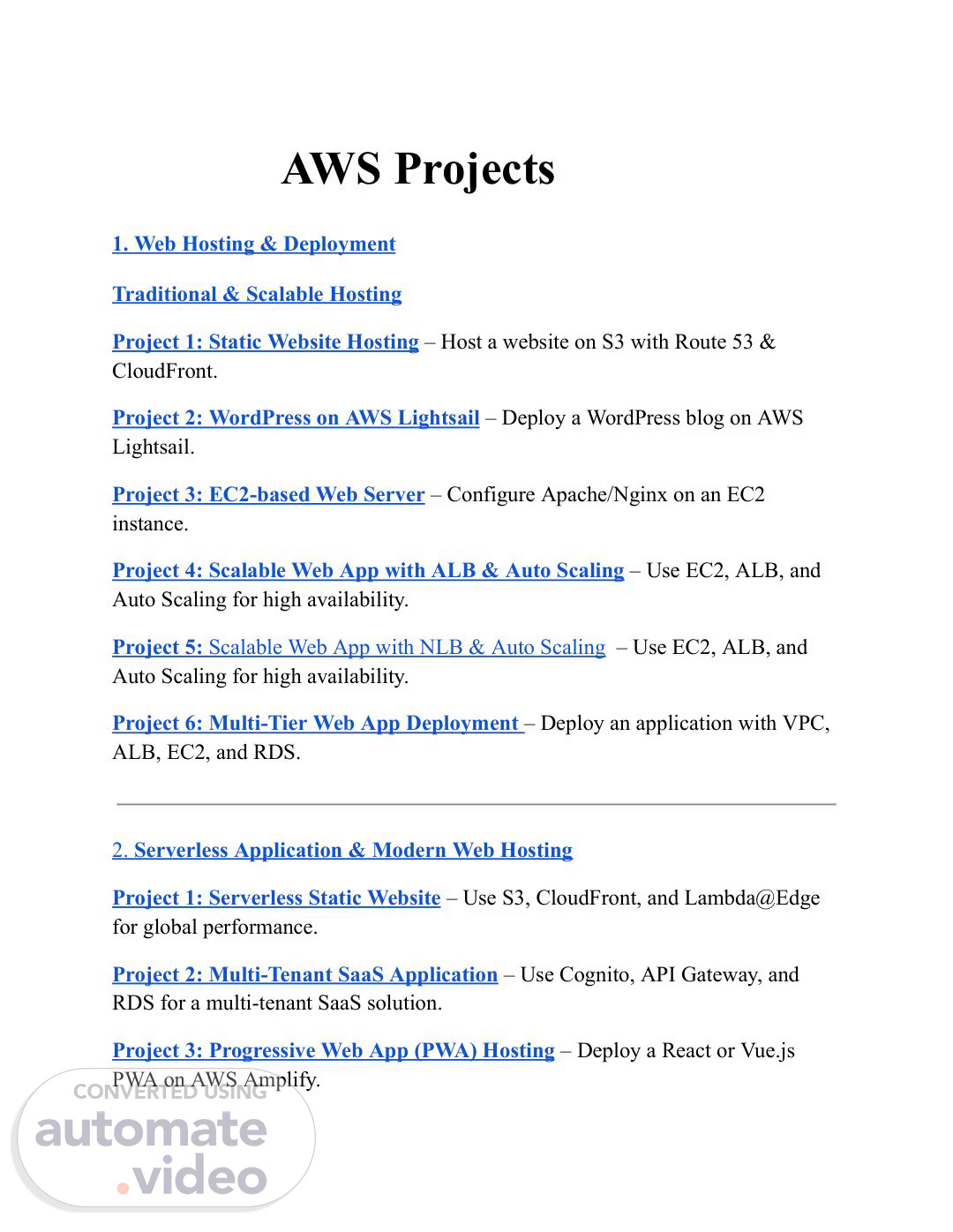

AWS Projects

1. Web Hosting & Deployment
Traditional & Scalable Hosting
Project 1: Static Website Hosting – Host a website on S3 with Route 53 & CloudFront.
Project 2: WordPress on AWS Lightsail – Deploy a WordPress blog on AWS Lightsail.
Project 3: EC2-based Web Server – Configure Apache/Nginx on an EC2 instance.
Project 4: Scalable Web App with ALB & Auto Scaling – Use EC2, ALB, and Auto Scaling for high availability.
Project 5: Scalable Web App with NLB & Auto Scaling – Use EC2, NLB, and Auto Scaling for high availability.
Project 6: Multi-Tier Web App Deployment – Deploy an application with VPC, ALB, EC2, and RDS.

2. Serverless Application & Modern Web Hosting
Project 1: Serverless Static Website – Use S3, CloudFront, and Lambda@Edge for global performance.
Project 2: Multi-Tenant SaaS Application – Use Cognito, API Gateway, and RDS for a multi-tenant SaaS solution.
Project 3: Progressive Web App (PWA) Hosting – Deploy a React or Vue.js PWA on AWS Amplify.

Scene 2 (6s)

Project 4: Jamstack Site with AWS – Use AWS Amplify, Headless CMS, and S3 for a Jamstack website.
Project 5: AWS App Runner Deployment – Deploy a containerized web application with AWS App Runner.
Project 6: Serverless API with Lambda & API Gateway – Build a RESTful API using Lambda.
Project 7: GraphQL API with AWS AppSync – Deploy a GraphQL backend using DynamoDB.
Project 8: Serverless Chatbot – Build an AI-powered chatbot with AWS Lex & Lambda.
Project 9: Event-Driven Microservices – Use SNS & SQS for asynchronous microservices communication.
Project 10: URL Shortener – Implement a Lambda-based URL shortener with DynamoDB.
Project 11: Serverless Expense Tracker – Use Lambda, DynamoDB, and API Gateway to build an expense management app.
Project 12: Serverless Image Resizer – Automatically resize images uploaded to an S3 bucket using Lambda.
Project 13: Real-time Polling App – Use WebSockets with API Gateway and DynamoDB for a real-time polling system.
Project 14: Serverless Sentiment Analysis – Analyze tweets using AWS Comprehend and store results in DynamoDB.

3. Infrastructure as Code (IaC) & Automation
Project 1: Terraform for AWS – Deploy VPC, EC2, and RDS using Terraform.

Scene 3 (13s)

Project 2: CloudFormation for Infrastructure as Code – Automate AWS resource provisioning.
Project 3: AWS CDK Deployment – Use AWS Cloud Development Kit (CDK) to manage cloud infrastructure.
Project 4: Automate AWS Resource Tagging – Use AWS Lambda and Config Rules for enforcing tagging policies.
Project 5: AWS Systems Manager for Automated Patching – Manage EC2 instance patching automatically.
Project 6: Automated Cost Optimization – Use AWS Lambda to detect and stop unused EC2 instances.
Project 7: Self-Healing Infrastructure – Use Terraform to create auto-healing EC2 instances with AWS Auto Scaling.
Project 8: Event-Driven Infrastructure Provisioning – Use AWS EventBridge and CloudFormation to automate infrastructure deployment.
Project 9: Automated EC2 Scaling Based on Load – Use Auto Scaling policies with CloudWatch to optimize cost and performance.

4. Security & IAM Management
Project 1: IAM Role & Policy Management – Secure AWS resources using IAM policies.
Project 2: AWS Secrets Manager for Credential Storage – Secure database credentials and API keys.
Project 3: API Gateway Security – Implement authentication with Cognito and protection using AWS WAF.
Project 4: AWS Security Hub & GuardDuty – Monitor security threats and enforce compliance.

Scene 4 (19s)

Project 5: Automated Compliance Auditing – Use AWS Config to check security best practices.
Project 6: Zero Trust Security Implementation – Enforce strict security rules using AWS IAM and VPC security groups.
Project 7: CloudTrail Logging & SIEM Integration – Integrate AWS CloudTrail logs with a SIEM tool for security monitoring.
Project 8: AWS WAF for Application Security – Protect a web application from SQL injection and XSS attacks using AWS WAF.

5. CI/CD Projects:
Project 1: AWS CodePipeline for Automated Deployments
Project 2: GitHub Actions + AWS ECS Deployment
Project 3: Jenkins CI/CD with Terraform on AWS
Project 4: Kubernetes GitOps with ArgoCD on AWS EKS
Project 5: Lambda-based Serverless CI/CD
Project 6: Multi-Account CI/CD Pipeline with AWS CodePipeline
Project 7: Containerized Deployment with AWS Fargate
Project 8: Blue-Green Deployment on AWS ECS

6. DevOps Projects:
Project 1: Infrastructure as Code (IaC) using Terraform on AWS
Project 2: AWS Auto Scaling with ALB and CloudWatch
Project 3: AWS Monitoring & Logging using ELK and CloudWatch

Scene 5 (26s)

Project 4: Automated Patch Management with AWS SSM
Project 5: AWS Security Hardening with IAM & Config Rules
Project 6: Disaster Recovery Setup with AWS Backup & Route 53
Project 7: Cost Optimization using AWS Lambda to Stop Unused EC2s
Project 8: Self-Healing Infrastructure with AWS Auto Scaling

7. Database & Storage Solutions
Project 1: MySQL RDS Deployment – Set up and manage an RDS database.
Project 2: DynamoDB CRUD Operations with Lambda – Create a serverless backend with DynamoDB.
Project 3: Data Warehouse with AWS Redshift – Store and analyze structured data in Redshift.
Project 4: ETL with AWS Glue – Transform data from S3 to Redshift using AWS Glue.
Project 5: Graph Database with Amazon Neptune – Store relational data in a graph-based model.
Project 6: Automated S3 Data Archival – Use S3 lifecycle rules to move data to Glacier.
Project 7: Automated Database Migration – Use AWS Database Migration Service (DMS) to migrate a database from MySQL to Aurora.

8. Monitoring & Logging
Project 1: Monitor AWS Resources with CloudWatch & SNS – Set up alerts for EC2, RDS, and S3.

Scene 6 (32s)

Project 2: Log Analysis with ELK Stack – Deploy Elasticsearch, Logstash, and Kibana on AWS.
Project 3: Serverless URL Monitor – Use Lambda & CloudWatch to check website uptime.
Project 4: Query S3 Logs with AWS Athena – Analyze stored logs without setting up a database.
Project 5: Application Performance Monitoring – Use AWS X-Ray to trace application requests and optimize performance.

9. Networking & Connectivity
Project 1: Route 53 DNS Management – Configure and manage DNS records for custom domains.
Project 2: AWS Transit Gateway for Multi-VPC Communication – Connect multiple AWS VPCs securely.
Project 3: Kubernetes on AWS (EKS) – Deploy a containerized workload using Amazon EKS.
Project 4: Hybrid Cloud Setup with AWS VPN – Connect an on-premises data center to AWS.

10. Data Processing & Analytics
Project 1: Big Data Processing with AWS EMR – Use Apache Spark to analyze large datasets.
Project 2: Real-Time Data Streaming with Kinesis – Process IoT or social media data in real time.

Scene 7 (38s)

Project 3: Amazon QuickSight for BI Dashboard – Visualize business data using Amazon QuickSight.
Project 4: AWS Athena for Log Analysis – Query CloudTrail and VPC Flow Logs using Athena.

11. AI/ML & IoT
Project 1: Train & Deploy ML Models with SageMaker – Use AWS SageMaker for predictive analytics.
Project 2: Fraud Detection System – Build an ML-based fraud detection model on AWS.
Project 3: AI-Powered Image Recognition – Use AWS Rekognition to analyze images.
Project 4: IoT Data Processing with AWS IoT Core – Store and analyze IoT sensor data in AWS.

12. Disaster Recovery & Backup
Project 1: Multi-Region Disaster Recovery Plan – Set up cross-region replication for S3 & RDS.
Project 2: AWS Backup for EBS Snapshots & Recovery – Automate backups and restore EC2 instances.
Project 3: Automated AMI Creation & Cleanup – Schedule AMI snapshots and delete old backups.

1. Web Hosting & Deployment

Scene 8 (44s)

Traditional & Scalable Hosting

Project 1: Static Website Hosting – Host a website on S3 with Route 53 & CloudFront.

Static Website Hosting using S3, Route 53 & CloudFront – Complete Steps
Hosting a static website on Amazon S3, integrating it with Route 53 for domain name resolution, and using CloudFront for content delivery enhances performance, security, and reliability.

Prerequisites:
✅ An AWS account
✅ A registered domain (either in Route 53 or another provider)

Step 1: Create an S3 Bucket for Website Hosting
1. Open the AWS S3 Console and click Create bucket.
2. Bucket name: Enter your domain name (e.g., example.com).
3. Region: Select your preferred AWS region.
4. Disable Block Public Access:
○ Uncheck "Block all public access" and confirm changes.
5. Click Create bucket.
6. Enable Static Website Hosting on the new bucket:
○ Go to the Properties tab.
○ Click Edit under Static website hosting.
○ Select Enable.
○ Choose "Host a static website".
○ Set index document as index.html.
○ Note the Bucket Website Endpoint URL.

Scene 9 (50s)

Step 2: Upload Website Files
1. Open your S3 bucket.
2. Click Upload → Add your index.html, style.css, script.js, etc.
3. Click Upload.

Step 3: Configure S3 Bucket Policy for Public Access
1. Go to the Permissions tab. Under Bucket Policy, click Edit and paste the following JSON policy:
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "PublicReadGetObject",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::example.com/*"
        }
      ]
    }
2. Replace example.com with your bucket name.
3. Click Save changes.
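The public-read policy from Step 3 can also be generated rather than edited by hand. The following is a minimal sketch, assuming the standard public-read statement for static website buckets; the function name `public_read_policy` is hypothetical and not part of any AWS SDK.

```python
import json


def public_read_policy(bucket_name: str) -> dict:
    """Build a bucket policy that lets anyone read objects in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The /* suffix targets the objects, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# Print JSON that can be pasted into the Bucket Policy editor.
print(json.dumps(public_read_policy("example.com"), indent=2))
```

Generating the document from the bucket name avoids the common mistake of leaving the example name in the Resource ARN.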

Scene 10 (55s)

Step 4: Register a Domain (if needed) in Route 53
1. Open AWS Route 53 Console.
2. Click Domains → Register Domain.
3. Search for and purchase a domain (e.g., example.com).
4. Wait for AWS to complete registration.

Step 5: Create a Hosted Zone in Route 53
1. In Route 53, go to Hosted Zones.
2. Click Create hosted zone.
3. Enter your domain name (example.com).
4. Choose Public Hosted Zone.
5. Click Create.

Step 6: Set Up CloudFront for Content Delivery
1. Open AWS CloudFront Console.
2. Click Create distribution.
3. Under Origin, configure:
○ Origin domain: Select your S3 bucket.
○ Origin access: Select Public.
○ Viewer Protocol Policy: Choose Redirect HTTP to HTTPS.
4. Alternate Domain Names (CNAMEs):
○ Enter your domain name (example.com).
5. Custom SSL Certificate:
○ Click Request or Import a Certificate with ACM.
○ Request an SSL certificate for your domain. For CloudFront, the certificate must be in the US East (N. Virginia) region, us-east-1.
○ Validate via email or DNS.
○ Once issued, attach it to the CloudFront distribution.

Scene 11 (1m 2s)

6. Click Create distribution and wait for deployment.

Step 7: Update Route 53 DNS Records
1. Go to Route 53 → Hosted Zone → Select your domain.
2. Click Create record.
3. Select Simple routing → Define simple record.
4. Record Name: Leave empty (for root domain).
5. Record Type: Select A – IPv4 Address.
6. Route Traffic To: Choose Alias to CloudFront Distribution.
7. Select your CloudFront distribution from the dropdown.
8. Click Create record.

Step 8: Verify and Test the Website
● Open a browser and visit your domain (https://example.com).
● If it doesn't work immediately, wait for DNS propagation (~30 mins to a few hours).

Summary of What We Did:
✅ Created an S3 bucket for hosting static website files.
✅ Configured public access & bucket policy for static hosting.
✅ Registered a domain and set up a hosted zone in Route 53.
✅ Created a CloudFront distribution for security & performance.
✅ Configured Route 53 DNS records to point the domain to CloudFront.
✅ Secured the website with SSL (HTTPS) using AWS ACM.

🚀 Your static website is now hosted on AWS S3, accessible via a custom domain with CloudFront caching!
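The alias record from Step 7 can be expressed as a Route 53 change batch, the JSON shape that the change-resource-record-sets API accepts. This is a sketch under assumptions: the helper name `alias_change_batch` and the distribution domain `d111111abcdef8.cloudfront.net` are placeholders, while `Z2FDTNDATAQYW2` is the fixed hosted zone ID Route 53 uses for CloudFront alias targets.

```python
import json

# Fixed hosted zone ID used for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(domain: str, distribution_domain: str) -> dict:
    """Build a change batch that points `domain` at a CloudFront distribution."""
    return {
        "Comment": "Point the domain at CloudFront",
        "Changes": [
            {
                "Action": "UPSERT",  # create the record, or update it if present
                "ResourceRecordSet": {
                    "Name": domain,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                        "DNSName": distribution_domain,
                        "EvaluateTargetHealth": False,
                    },
                },
            }
        ],
    }


# Placeholder distribution domain; use the one shown in the CloudFront console.
batch = alias_change_batch("example.com", "d111111abcdef8.cloudfront.net")
print(json.dumps(batch, indent=2))
```

An alias A record is used instead of a CNAME because DNS does not allow a CNAME at the root of a domain.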

Scene 12 (1m 9s)

Project 2: WordPress on AWS Lightsail – Deploy a WordPress blog on AWS Lightsail.

Deploying a WordPress Blog on AWS Lightsail
AWS Lightsail is a cost-effective and beginner-friendly platform for deploying applications, including WordPress. Lightsail simplifies cloud hosting by providing pre-configured virtual private servers (VPS), making it ideal for WordPress blogs.

Steps to Deploy WordPress on AWS Lightsail

1. Sign in to AWS and Navigate to Lightsail
● Go to AWS Lightsail Console.
● Click on "Create instance" to set up a new server.

2. Choose an Instance Location
● Select the region closest to your target audience for better performance.

3. Select an Instance Image
● Choose "Apps + OS", then select WordPress (which comes pre-installed).
● Alternatively, you can choose Linux/Unix + WordPress if you want more customization.

4. Choose an Instance Plan
● Select a pricing plan based on your expected traffic.
● For a small blog, the $5/month plan is a good starting point.

5. Name and Create the Instance
● Give your instance a meaningful name (e.g., my-wordpress-blog).
● Click "Create instance", and wait for AWS to provision it.

6. Access Your WordPress Site

Scene 13 (1m 16s)

● Once the instance is running, go to the Networking tab in Lightsail.
● Copy the public IP address and paste it into your browser to open your WordPress site.

7. Retrieve WordPress Admin Credentials
● Connect to your instance using SSH (Click “Connect using SSH” in Lightsail).
● Run the following command to get your WordPress admin password:
    cat bitnami_application_password
● Note the password and use it to log in at http://your-public-ip/wp-admin/.

8. Configure Static IP (Recommended)
● Go to the Networking tab and create a Static IP so that your site keeps the same IP when the instance is stopped and restarted.
● Attach the static IP to your instance.

9. Configure a Custom Domain (Optional)
● Register a domain from AWS Route 53 or any domain provider.
● Update your domain’s A record to point to your static IP.

10. Enable SSL for HTTPS (Recommended)
● Install a free SSL certificate using Let’s Encrypt with the following command:
    sudo /opt/bitnami/bncert-tool
● Follow the prompts to enable HTTPS.

11. Secure and Optimize WordPress
● Update WordPress, themes, and plugins.
● Use security plugins like Wordfence.
● Optimize performance with caching plugins like W3 Total Cache or LiteSpeed Cache.

Scene 14 (1m 23s)

12. Backup Your Instance (Recommended)
● In Lightsail, go to the Snapshots tab and create a backup.
● You can also enable automatic snapshots.

Final Thoughts
Deploying WordPress on AWS Lightsail is a fast and cost-effective way to start a blog. With a few additional steps like securing your site with SSL, setting up a static IP, and using a custom domain, you can create a professional and reliable WordPress website.

Project 3: EC2-based Web Server – Configure Apache/Nginx on an EC2 instance.
In this project, you will deploy a web server using Amazon EC2. You will launch an EC2 instance, install either Apache or Nginx, and configure it to serve a basic website.

Steps to Set Up an EC2-based Web Server

Step 1: Launch an EC2 Instance
1. Go to the AWS Management Console → EC2.
2. Click Launch Instance.
3. Configure the instance:
○ Name: MyWebServer
○ AMI: Choose Amazon Linux 2 or Ubuntu
○ Instance Type: t2.micro (Free Tier eligible)
○ Key Pair: Create a new key pair or use an existing one.
○ Security Group: Allow SSH (port 22) and HTTP (port 80).
4. Click Launch.

Scene 15 (1m 30s)

Step 2: Connect to the EC2 Instance
Open a terminal and run:
    ssh -i your-key.pem ec2-user@your-instance-public-ip
(For Ubuntu, use ubuntu instead of ec2-user.)

Step 3: Install Apache or Nginx

For Apache (httpd)
Update the package repository:
    sudo yum update -y    # Amazon Linux
    sudo apt update -y    # Ubuntu
Install Apache:
    sudo yum install httpd -y      # Amazon Linux
    sudo apt install apache2 -y    # Ubuntu
Start and enable Apache:
    sudo systemctl start httpd      # Amazon Linux
    sudo systemctl enable httpd     # Amazon Linux
    sudo systemctl start apache2    # Ubuntu
    sudo systemctl enable apache2   # Ubuntu

Scene 16 (1m 34s)

Verify installation:
    sudo systemctl status httpd      # Amazon Linux
    sudo systemctl status apache2    # Ubuntu

For Nginx
Install Nginx:
    sudo yum install nginx -y    # Amazon Linux
    sudo apt install nginx -y    # Ubuntu
Start and enable Nginx:
    sudo systemctl start nginx
    sudo systemctl enable nginx
Verify installation:
    sudo systemctl status nginx

Step 4: Configure Firewall
Allow HTTP traffic (only needed if firewalld is installed and running on the instance):
    sudo firewall-cmd --permanent --add-service=http
    sudo firewall-cmd --reload
(For Ubuntu, this step is not needed if using a security group in AWS.)

Step 5: Deploy a Web Page

Scene 17 (1m 38s)

Edit the default index file:
    # tee runs under sudo, so the write to the root-owned directory succeeds
    echo "<h1>Welcome to My Web Server</h1>" | sudo tee /var/www/html/index.html
(For Nginx on Amazon Linux, use /usr/share/nginx/html/index.html.)

Step 6: Access the Web Server
Open a browser and go to:
    http://your-instance-public-ip
You should see "Welcome to My Web Server".

Step 7: Configure Auto Start (Optional)
Ensure the web server starts on reboot:
    sudo systemctl enable httpd    # Apache
    sudo systemctl enable nginx    # Nginx

Conclusion
You have successfully deployed an EC2-based web server running Apache or Nginx. This setup is commonly used for hosting websites, web applications, or acting as a reverse proxy.

Project 4: Scalable Web App with ALB & Auto Scaling – Use EC2, ALB, and Auto Scaling for high availability.
This project involves deploying a highly available web application using Amazon EC2, Application Load Balancer (ALB), and Auto Scaling. The goal is to

Scene 18 (1m 44s)

ensure that the web app can scale automatically based on traffic demand while maintaining availability.

Steps to Deploy a Scalable Web App

Step 1: Create a Launch Template
1. Go to AWS Console → EC2 → Launch Templates → Create Launch Template.
2. Configure:
○ Name: WebAppTemplate
○ AMI: Amazon Linux 2 or Ubuntu
○ Instance Type: t2.micro (Free Tier) or t3.medium
○ Key Pair: Select an existing key pair or create a new one.
○ User Data (Optional, for auto-installation of Apache/Nginx):
    #!/bin/bash
    sudo yum update -y
    sudo yum install httpd -y
    sudo systemctl start httpd
    sudo systemctl enable httpd
    echo "<h1>Welcome to Scalable Web App</h1>" | sudo tee /var/www/html/index.html
○ Security Group: Allow SSH (22), HTTP (80), and HTTPS (443).
3. Click Create Launch Template.
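When a launch template is created through the EC2 API rather than the console, the user data script has to be supplied base64-encoded. The sketch below shows that encoding step only; the helper name `encode_user_data` is hypothetical, and the script mirrors the Amazon Linux example above without sudo, since user data already runs as root.

```python
import base64

# Same steps as the launch template example; user data runs as root.
USER_DATA = """#!/bin/bash
yum update -y
yum install -y httpd
systemctl start httpd
systemctl enable httpd
echo "<h1>Welcome to Scalable Web App</h1>" > /var/www/html/index.html
"""


def encode_user_data(script: str) -> str:
    """Return the script base64-encoded, as the launch template API expects."""
    if not script.startswith("#!"):
        # Without a shebang line, cloud-init will not run it as a shell script.
        raise ValueError("user data script must start with a shebang line")
    return base64.b64encode(script.encode("utf-8")).decode("ascii")


encoded = encode_user_data(USER_DATA)
print(encoded[:40] + "...")
```

The shebang check catches a truncated first line early, before an instance boots without a web server.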

Scene 19 (1m 49s)

Step 2: Create an Auto Scaling Group
1. Go to EC2 → Auto Scaling Groups → Create Auto Scaling Group.
2. Choose Launch Template: Select WebAppTemplate.
3. Configure Group Size:
○ Desired Capacity: 2
○ Minimum Instances: 1
○ Maximum Instances: 4
4. Select Network:
○ Choose an existing VPC.
○ Select at least two subnets across different Availability Zones (AZs).
5. Attach Load Balancer:
○ Choose Application Load Balancer (ALB).
○ Create Target Group:
■ Target Type: Instance
■ Protocol: HTTP
■ Health Check: /
○ Register instances later (Auto Scaling will handle this).
6. Set Scaling Policies (Optional):
○ Enable Auto Scaling based on CPU utilization.
○ Example Policy: Scale out when CPU > 60%, Scale in when CPU < 40%.
7. Click Create Auto Scaling Group.

Step 3: Create an Application Load Balancer (ALB)
1. Go to EC2 → Load Balancers → Create Load Balancer.
2. Select Application Load Balancer.
3. Configure Basic Settings:
○ Name: WebAppALB
○ Scheme: Internet-facing
○ VPC: Select the same VPC as Auto Scaling.
○ Availability Zones: Choose at least 2.
4. Configure Listeners:

Scene 20 (1m 56s)

○ Protocol: HTTP
○ Port: 80
5. Target Group:
○ Select existing Target Group (from Auto Scaling Group).
6. Security Group:
○ Allow HTTP (80).
7. Click Create Load Balancer.

Step 4: Test the Setup
1. Get ALB DNS Name:
○ Go to EC2 → Load Balancers → Copy the ALB DNS Name.
○ Open a browser and enter:
    http://your-alb-dns-name
○ You should see: Welcome to Scalable Web App

Step 5: Verify Auto Scaling
1. Increase Load to Trigger Scaling:
○ Use a tool like Apache Benchmark or manually increase traffic. Run:
    ab -n 1000 -c 50 http://your-alb-dns-name/
○ Check EC2 → Auto Scaling Group to see if new instances launch.
2. Stop an Instance:
○ Manually stop an instance to verify if Auto Scaling replaces it.
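The example policy from Step 2 (scale out above 60% CPU, scale in below 40%, between 1 and 4 instances) can be modelled as a small function to reason about what the group should do under load. This is a simplified illustration, not how the Auto Scaling service evaluates alarms: cooldowns and evaluation periods are left out, and the name `next_capacity` is hypothetical.

```python
def next_capacity(
    current: int,
    avg_cpu: float,
    *,
    minimum: int = 1,
    maximum: int = 4,
    scale_out_above: float = 60.0,
    scale_in_below: float = 40.0,
) -> int:
    """Return the instance count after one scaling evaluation."""
    if avg_cpu > scale_out_above:
        target = current + 1  # add one instance under high load
    elif avg_cpu < scale_in_below:
        target = current - 1  # remove one instance when mostly idle
    else:
        target = current  # inside the 40-60% band: no change
    # The group size limits always win over the policy.
    return max(minimum, min(maximum, target))


# Walk a group of 2 instances through a hypothetical load spike and recovery.
capacity = 2
for cpu in (75.0, 82.0, 90.0, 55.0, 20.0):
    capacity = next_capacity(capacity, cpu)
    print(f"cpu={cpu:.0f}% -> capacity={capacity}")
```

Keeping a gap between the two thresholds avoids the group flapping between sizes when CPU hovers around a single cutoff.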

Scene 21 (2m 2s)

Conclusion
This setup ensures:
✅ High Availability using ALB
✅ Scalability using Auto Scaling
✅ Redundancy across multiple AZs
This architecture is commonly used for production applications that need reliability and cost optimization.

Project 5: Scalable Web App with NLB & Auto Scaling – Use EC2, NLB, and Auto Scaling for high availability.

Overview:
In this project, we will deploy a scalable and highly available web application using EC2 instances, Network Load Balancer (NLB), and Auto Scaling. This setup ensures that our web application can handle varying traffic loads efficiently while maintaining availability and reliability.

Steps to Implement the Project

1. Set Up EC2 Instances
● Launch multiple EC2 instances with a web server installed (e.g., Apache, Nginx, or a custom application).
● Configure the security group to allow HTTP (port 80) and necessary ports for application communication.
● Ensure the web server is properly configured to serve requests.

2. Create a Network Load Balancer (NLB)
● Navigate to EC2 > Load Balancers > Create Load Balancer.
● Select Network Load Balancer as the type.
● Choose the public subnets where your EC2 instances are running.

Scene 22 (2m 8s)

● Create a target group and register the EC2 instances.
● Configure a TCP listener on port 80 and forward traffic to the target group (an NLB works at layer 4, so its listeners use TCP, TLS, or UDP rather than HTTP).

3. Configure Auto Scaling Group
● Create an Auto Scaling Group with a Launch Template or Launch Configuration that defines:
○ EC2 instance type, AMI, security group, and key pair.
○ User data script to install the web server upon launch.
● Set the minimum, maximum, and desired capacity for instances.
● Define scaling policies to increase/decrease instances based on CPU utilization or request load.

4. Enable Health Checks & Monitoring
● Configure NLB health checks to monitor instance health.
● Enable Auto Scaling health checks to replace unhealthy instances automatically.
● Use Amazon CloudWatch to track metrics like active flow count, CPU utilization, and instance activity.

5. Test the Setup
● Obtain the NLB DNS name and test the application in a browser.
● Simulate traffic using Apache Benchmark (ab) or Siege to verify Auto Scaling behavior.
● Monitor the Auto Scaling Group to ensure new instances launch and terminate as needed.

6. Optimize & Secure the Architecture
● Enable HTTPS by adding a TLS listener (port 443) with an AWS Certificate Manager (ACM) SSL certificate.
● Implement IAM roles for secure EC2 access.
● Use AWS WAF to protect against web threats. WAF cannot be attached to an NLB directly, so this requires a supported resource such as CloudFront or an ALB in front of the application.
● Enable CloudWatch alarms for proactive monitoring.

Scene 23 (2m 16s)

Outcome:
● A fully scalable, high-availability web application with automatic load balancing and scaling.
● Improved performance, fault tolerance, and cost optimization through Auto Scaling.
● Seamless user experience even under varying traffic conditions.

Project 6: Multi-Tier Web App Deployment – Deploy an application with VPC, ALB, EC2, and RDS.
A Multi-Tier Web Application architecture enhances scalability, security, and maintainability by separating concerns into multiple layers. This project deploys a web application on AWS using a Virtual Private Cloud (VPC), Application Load Balancer (ALB), EC2 instances for the web and application layers, and RDS (Relational Database Service) for the database layer.

Complete Steps for Deployment

Step 1: Set Up the VPC
1. Go to the AWS Management Console → VPC.
2. Create a new VPC (e.g., 10.0.0.0/16).
3. Create a public and a private subnet, repeated in at least two Availability Zones (an ALB and an RDS Multi-AZ deployment both need subnets in two AZs):
○ Public Subnet (for ALB & Bastion Host) (e.g., 10.0.1.0/24).
○ Private Subnet (for EC2 App & RDS) (e.g., 10.0.2.0/24).
4. Create an Internet Gateway (IGW) and attach it to the VPC.
5. Create a NAT Gateway in a public subnet.
6. Set up Route Tables:
○ Public subnet → Route to IGW.
○ Private subnet → Route to NAT Gateway (for internet access from private instances).
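Before creating the VPC from Step 1, the address plan can be checked offline. The sketch below uses Python's standard ipaddress module with the example CIDRs from the steps above; the helper names `validate_layout` and `usable_addresses` are hypothetical. It relies on the fact that AWS reserves five addresses in every subnet.

```python
import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # first four addresses plus the last one


def validate_layout(vpc_cidr: str, subnets: dict) -> None:
    """Raise ValueError if a subnet is outside the VPC or overlaps another."""
    vpc = ipaddress.ip_network(vpc_cidr)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    for name, net in nets.items():
        if not net.subnet_of(vpc):
            raise ValueError(f"{name} ({net}) is not inside the VPC {vpc}")
    names = list(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                raise ValueError(f"{first} overlaps {second}")


def usable_addresses(cidr: str) -> int:
    """Number of addresses left for instances after AWS reservations."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


validate_layout("10.0.0.0/16", {"public": "10.0.1.0/24", "private": "10.0.2.0/24"})
print(usable_addresses("10.0.1.0/24"))
```

The same check extends to a second Availability Zone by adding more entries to the dictionary, for example a hypothetical 10.0.3.0/24 and 10.0.4.0/24.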

Scene 24 (2m 24s)

Step 2: Launch EC2 Instances
1. Go to EC2 Dashboard → Launch Instance.
2. Create a Bastion Host in the public subnet for SSH access.
3. Create Web Server EC2 instances in the private subnet.
4. Install required software on EC2 instances:
    sudo yum update -y
    sudo yum install -y httpd mysql
    sudo systemctl start httpd
    sudo systemctl enable httpd
5. Repeat for multiple instances if needed for load balancing.

Step 3: Set Up Application Load Balancer (ALB)
1. Go to EC2 Dashboard → Load Balancer → Create Load Balancer.
2. Choose Application Load Balancer.
3. Attach it to the public subnets (an ALB needs subnets in at least two Availability Zones).
4. Create a target group and register EC2 instances (web/app tier).
5. Configure health checks (/health endpoint).
6. Update Security Groups to allow HTTP/HTTPS.

Step 4: Deploy the Database (RDS)
1. Go to RDS → Create Database.
2. Select MySQL/PostgreSQL.
3. Choose Multi-AZ Deployment (for high availability).
4. Set up database credentials.
5. Place RDS in the private subnet.
6. Update security groups to allow only EC2 instances to connect.

Scene 25 (2m 31s)

Step 5: Configure Security Groups
● ALB Security Group → Allow HTTP (80) and HTTPS (443) from the internet.
● Web/App EC2 Security Group → Allow HTTP (80) only from the ALB.
● Database Security Group → Allow MySQL (3306) or PostgreSQL (5432) only from the Web/App layer.

Step 6: Deploy the Web Application
1. Upload application code to EC2:
    sudo yum install -y git
    git clone <your-repository>
    cd <your-app>
2. Configure the database connection in your app.
3. Start the application.

Step 7: Testing & Scaling
1. Test the Application
○ Get the ALB DNS name and access the web app via a browser.
2. Enable Auto Scaling
○ Go to EC2 Auto Scaling Groups.
○ Configure a launch template for EC2 instances.
○ Set up scaling policies (e.g., scale up on high CPU usage).

Conclusion

Scene 26 (2m 36s)

This setup provides a scalable, highly available, and secure multi-tier web application architecture on AWS. It ensures redundancy using ALB, Auto Scaling, and RDS Multi-AZ, making it resilient to failures.

2. Serverless & Modern Web Hosting

Project 1: Serverless Static Website – Use S3, CloudFront, and Lambda@Edge for global performance.
A serverless static website on AWS eliminates the need for traditional web servers by using Amazon S3 for hosting static content, CloudFront for global content delivery, and Lambda@Edge for dynamic content processing. This setup provides high availability, low latency, and cost efficiency.

Steps to Deploy a Serverless Static Website on AWS

Step 1: Create an S3 Bucket for Website Hosting
1. Open the AWS Management Console → Navigate to S3.
2. Click Create bucket, enter a unique name, and choose the region.
3. Uncheck "Block all public access" (if you want a public website).
4. Upload your static website files (HTML, CSS, JS, images).
5. Enable Static website hosting under Properties:
○ Choose "Enable".
○ Set index.html as the default document.
○ Copy the Bucket Website URL.

Step 2: Configure Bucket Policy for Public Access
1. Go to Permissions → Click Bucket Policy. Add the following policy to make the website publicly accessible:

Scene 27 (2m 44s)

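    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "PublicReadGetObject",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::your-bucket-name/*"
        }
      ]
    }
2. Replace your-bucket-name with the name of your bucket and save the policy.

Step 3: Create a CloudFront Distribution
1. Go to CloudFront in AWS Console.
2. Click Create Distribution.
3. Under Origin, select your S3 bucket (not the website endpoint, but the bucket itself).
4. Enable "Redirect HTTP to HTTPS".
5. Set Caching Policy to CachingOptimized (for better performance).
6. Click Create and note the CloudFront URL.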

Scene 28 (2m 48s)

Step 4: (Optional) Use Lambda@Edge for Dynamic Processing
If you need to modify responses dynamically (e.g., adding security headers, URL rewrites), create a Lambda@Edge function.
1. Go to Lambda → Click Create Function.
2. Choose Author from Scratch → Select Node.js or Python.
3. Create the function in us-east-1, the region Lambda@Edge functions must be deployed from.
4. Add this sample script to modify HTTP headers:
    exports.handler = async (event) => {
      // Assumption: attached to a response trigger; adds one security header as an example.
      const response = event.Records[0].cf.response;
      response.headers['strict-transport-security'] = [
        { key: 'Strict-Transport-Security', value: 'max-age=63072000; includeSubDomains' },
      ];
      return response;
    };
5. Deploy and associate it with CloudFront behavior.

Step 5: (Optional) Use a Custom Domain with Route 53
1. Register a domain using Route 53 (or use an existing domain).
2. Create an alias record (or a CNAME record for a subdomain such as www) pointing to your CloudFront distribution.
3. Use AWS Certificate Manager (ACM) to enable HTTPS with SSL.

Conclusion

Scene 29 (2m 54s)

With this setup, your static website is hosted on S3, served globally using CloudFront, and can be enhanced with Lambda@Edge for additional processing. This architecture ensures scalability, security, and cost efficiency.

Project 2: Multi-Tenant SaaS Application – Use Cognito, API Gateway, and RDS for a multi-tenant SaaS solution.
A Multi-Tenant SaaS Application allows multiple customers (tenants) to use the same application while keeping their data secure and isolated. AWS provides a scalable solution using Cognito for authentication, API Gateway for managing API requests, and RDS for structured data storage. This setup ensures security, performance, and scalability for your SaaS platform.

Steps to Deploy a Multi-Tenant SaaS Application on AWS

1. Set Up AWS Cognito for User Authentication
● Go to the AWS Cognito service in the AWS Console.
● Create a User Pool to manage authentication for tenants.
● Enable Multi-Tenant Support using custom attributes (e.g., tenant_id).
● Configure authentication providers (Google, Facebook, or SAML-based authentication if needed).
● Create an App Client to integrate with your application.

2. Create an RDS Database for Multi-Tenant Data Storage
● Go to Amazon RDS and create a new database (PostgreSQL or MySQL recommended).
● Choose an instance type based on the expected load.
● Configure a multi-tenant database model:
○ Single database, shared schema (add tenant_id column in tables).
○ Single database, separate schema per tenant.

Scene 30 (3m 2s)

○ Database per tenant (useful for large enterprises).

3. Build APIs Using AWS API Gateway and Lambda
● Go to Amazon API Gateway and create a new REST API.
● Define API routes for different functionalities (e.g., /users, /orders).
● Use Cognito Authorizer to secure API access.
● Deploy Lambda functions to handle requests:
○ Create Lambda functions to interact with the RDS database.
○ Implement tenant isolation logic (ensure each tenant can access only
○ atus } }

Use the listTasks query to retrieve all tasks:
    query }
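The tenant isolation logic mentioned for the multi-tenant Lambda functions can be sketched with the shared-schema model, where every table carries a tenant_id column and every query filters on it. This is an illustration under assumptions: sqlite3 stands in for the RDS database so the example runs locally, the `orders` table and the helper names are hypothetical, and in a real deployment the tenant ID would come from the verified Cognito token rather than from request input.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    """Create a shared table in which each row belongs to one tenant."""
    conn.execute(
        "CREATE TABLE orders ("
        "id INTEGER PRIMARY KEY, tenant_id TEXT NOT NULL, item TEXT NOT NULL)"
    )


def add_order(conn: sqlite3.Connection, tenant_id: str, item: str) -> None:
    conn.execute(
        "INSERT INTO orders (tenant_id, item) VALUES (?, ?)", (tenant_id, item)
    )


def list_orders(conn: sqlite3.Connection, tenant_id: str) -> list:
    """Return only the rows owned by the given tenant."""
    # The tenant filter is applied on every read; parameters prevent injection.
    rows = conn.execute(
        "SELECT item FROM orders WHERE tenant_id = ? ORDER BY id", (tenant_id,)
    )
    return [row[0] for row in rows]


conn = sqlite3.connect(":memory:")
create_schema(conn)
add_order(conn, "tenant-a", "laptop")
add_order(conn, "tenant-b", "phone")
print(list_orders(conn, "tenant-a"))
```

Routing every query through helpers that require a tenant ID makes it harder to write an unscoped read by accident.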

Scene 31 (3m 6s)

Step 6: Secure the API (Optional)
● Use AWS Cognito for user authentication.
● Enable IAM authentication for secure access.

Conclusion
You have successfully deployed a GraphQL API using AWS AppSync and DynamoDB. This setup allows seamless CRUD operations with real-time updates, making it an efficient backend solution for modern applications.

Project 3: Progressive Web App (PWA) Hosting – Deploy a React or Vue.js PWA on AWS Amplify.

Introduction
A Progressive Web App (PWA) combines the best of web and mobile applications by offering fast load times, offline support, and a native-like experience. AWS Amplify provides an easy way to deploy and host PWAs built with React or Vue.js, with automatic CI/CD integration.

Steps to Deploy a React or Vue.js PWA on AWS Amplify

Step 1: Prerequisites
● AWS account
● Node.js installed (check with node -v)
● GitHub, GitLab, or Bitbucket repository with a React or Vue.js PWA

Scene 32 (3m 12s)

Step 2: Create a React or Vue.js PWA (Optional)
For React:
    npx create-react-app my-pwa --template cra-template-pwa
    cd my-pwa
    npm start
For Vue.js:
    npm create vue@latest my-pwa
    cd my-pwa
    npm install
    npm run dev

Step 3: Push the Project to GitHub
    git init

Scene 33 (3m 15s)

    git add .
    git commit -m "Initial PWA commit"
    git branch -M main
    git remote add origin <your-repository-url>
    git push -u origin main

Step 4: Set Up AWS Amplify for Hosting
1. Go to AWS Amplify Console
○ Navigate to AWS Amplify Console.
○ Click "Get Started" under "Deploy".
2. Connect to Your Git Repository
○ Select GitHub/GitLab/Bitbucket.
○ Authenticate and choose your repository.
○ Select the branch (e.g., main).
3. Configure Build Settings
○ AWS Amplify will detect the framework (React/Vue).
○ Modify amplify.yml if needed for custom build settings.

Step 5: Deploy the PWA
● Click "Save and Deploy".
● AWS Amplify will build and deploy the PWA.
● Once deployment is complete, you will get a hosted URL (e.g., https://your-app.amplifyapp.com).

Step 6: Enable PWA Features in AWS Amplify

Scene 34 (3m 21s)

1. Ensure the Web App Has a Manifest File
○ React PWA template includes public/manifest.json.
○ Vue.js PWAs should have a manifest.json file in public/.
2. Check Service Worker Configuration
○ React PWA uses src/service-worker.js.
○ Vue.js PWAs can use Workbox for service workers.
3. Set Up HTTPS (Mandatory for PWAs)
○ AWS Amplify automatically enables HTTPS.
○ You can also connect a custom domain.

Step 7: Verify and Test the PWA
● Open the hosted URL in Chrome.
● Use Lighthouse in DevTools (Ctrl + Shift + I → "Lighthouse" tab → Generate Report).
● Check if the app is installable and supports offline usage.

Conclusion
You have successfully deployed a React or Vue.js PWA on AWS Amplify! This setup ensures seamless CI/CD, HTTPS, and scalability for your PWA.

Project 4: Jamstack Site with AWS – Use AWS Amplify, Headless CMS, and S3 for a Jamstack website.
Build a modern, fast, and scalable Jamstack website using AWS Amplify, a Headless CMS, and S3 for static hosting. This approach decouples the frontend from the backend, improving performance, security, and scalability.

Steps to Build the Jamstack Site with AWS

Scene 35 (3m 28s)

Step 1: Set Up AWS Amplify
1. Sign in to the AWS Management Console.
2. Navigate to AWS Amplify and create a new project.
3. Connect a GitHub repository containing your frontend code (React, Vue, or Next.js).
4. Configure Amplify for continuous deployment.
Step 2: Choose a Headless CMS
1. Select a Headless CMS like Strapi, Contentful, or Sanity.
2. Create a new project and define content models (e.g., blog posts, pages).
3. Publish and retrieve content via the CMS API.
Step 3: Configure AWS S3 for Static Hosting
1. Open the AWS S3 Console and create a new bucket.
2. Enable static website hosting in the bucket settings.
3. Upload your pre-built static files if using S3 as an alternative deployment option.
Step 4: Connect CMS with Frontend
1. Fetch data from the CMS using REST or GraphQL APIs.
2. Display dynamic content on your website using API calls.
Step 5: Deploy and Test the Website
1. Deploy the frontend via AWS Amplify or S3.
2. Enable CDN via AWS CloudFront for fast global access.
3. Test your website and verify performance.
Step 6: Set Up Custom Domain and SSL
1. Configure a custom domain in AWS Route 53.
2. Use AWS Certificate Manager (ACM) to enable HTTPS. A certificate attached to a CloudFront distribution must be requested in the us-east-1 region.
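Step 4 above (fetching content from the CMS and displaying it) can be sketched in a few lines of Python, for example as part of a build script that pre-renders pages. This is a minimal sketch, not a specific CMS client: the endpoint URL, the bearer-token header, and the post fields `title` and `body` are assumptions for illustration, and each CMS (Strapi, Contentful, Sanity) has its own response shape.

```python
import json
from html import escape
from urllib.request import Request, urlopen

# Hypothetical endpoint: replace with the REST URL your CMS exposes.
CMS_POSTS_URL = "https://cms.example.com/api/posts"


def fetch_posts(url=CMS_POSTS_URL, token=None, opener=urlopen):
    """Fetch a JSON list of posts from the CMS REST API."""
    headers = {"Accept": "application/json"}
    if token:
        # Assumes the CMS accepts a bearer token; check your CMS docs.
        headers["Authorization"] = f"Bearer {token}"
    with opener(Request(url, headers=headers)) as response:
        return json.load(response)


def render_posts(posts):
    """Render posts as an HTML fragment, escaping CMS-supplied text."""
    items = [
        f"<article><h2>{escape(p['title'])}</h2><p>{escape(p['body'])}</p></article>"
        for p in posts
    ]
    return "\n".join(items)
```

Calling `render_posts(fetch_posts())` at build time and writing the result into a static page keeps the site static at request time, which is the usual Jamstack trade-off: content changes require a rebuild, but visitors never wait on the CMS.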

Scene 36 (3m 36s)

Conclusion
This project helps create a fast, secure, and scalable Jamstack website using AWS services. With AWS Amplify for deployment, a Headless CMS for content management, and S3 for storage, you ensure a seamless user experience.
Project 5: AWS App Runner Deployment – Deploy a containerized web application with AWS App Runner.
Introduction: AWS App Runner is a fully managed service that simplifies deploying containerized web applications without managing infrastructure. It automatically builds and scales applications from container images stored in Amazon ECR or directly from source code in GitHub. This project will guide you through deploying a containerized web application using AWS App Runner.
Complete Steps:
Step 1: Prerequisites
● AWS Account
● Docker installed on your local machine
● AWS CLI configured
● A containerized web application (e.g., a simple Node.js or Python Flask app)
Step 2: Create and Build a Docker Image
1. Write a simple web application (e.g., Node.js, Flask). Create a Dockerfile in your project directory. Example for Node.js:
dockerfile
    FROM node:18

Scene 37 (3m 43s)

    WORKDIR /app
    COPY . .
    RUN npm install
    EXPOSE 8080
    CMD ["node", "app.js"]
2. Build the Docker image:
sh
    docker build -t my-app-runner-app .
Step 3: Push Image to Amazon Elastic Container Registry (ECR)
1. Create an ECR repository:
sh
    aws ecr create-repository --repository-name my-app-runner-app
2. Authenticate Docker with ECR:
sh
    aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin <AWS_ACCOUNT_ID>.dkr.ecr.us-east-1.amazonaws.com
3. Tag the image:
sh

Scene 38 (3m 47s)

    docker tag my-app-runner-app:latest <AWS_ACCOUNT_ID>.dkr.ecr.us-east-1.amazonaws.com/my-app-runner-app:latest
4. Push the image to ECR:
sh
    docker push <AWS_ACCOUNT_ID>.dkr.ecr.us-east-1.amazonaws.com/my-app-runner-app:latest
Step 4: Deploy to AWS App Runner
1. Go to AWS Console > App Runner.
2. Click Create an App Runner Service.
3. Select Container Registry and choose Amazon ECR.
4. Select the repository and image.
5. Configure the service:
   ○ Set a service name (e.g., my-app-runner-service).
   ○ Set port to 8080 (or as per your application).
   ○ Select Auto Scaling (default settings).
6. Click Deploy and wait for App Runner to set up the service.
Step 5: Access the Application
● Once deployment is complete, AWS App Runner provides a public URL.
● Open the URL in a browser to verify the application is running.
Step 6: Monitor & Update the Service
● Use AWS App Runner logs and metrics to monitor your service.
● Update your image in ECR and redeploy for changes.
Conclusion

Scene 39 (3m 54s)

AWS App Runner makes it easy to deploy and scale containerized applications without managing infrastructure. It's ideal for developers who want a serverless experience for their web apps.
Project 6: Serverless API with Lambda & API Gateway – Build a RESTful API using Lambda.
This project involves building a RESTful API using AWS Lambda and API Gateway. It eliminates the need for managing servers, providing a scalable and cost-effective solution. Lambda handles the backend logic, while API Gateway routes requests and manages security.
Steps to Build the Serverless API
Step 1: Create an AWS Lambda Function
● Go to the AWS Lambda console.
● Click Create Function → Select Author from Scratch.
● Enter a function name (e.g., ServerlessAPI).
● Choose Runtime as Python 3.x or Node.js 18.x.
● Select Create a new role with basic Lambda permissions.
● Click Create Function.
In the function editor, add this sample code:
Python Example (app.py)
python
    import json

    def lambda_handler(event, context):
        return {

Scene 40 (4m 0s)

            "statusCode": 200,
            # Placeholder payload; return whatever your API needs.
            "body": json.dumps({"message": "Hello from Lambda!"})
        }
● Click Deploy.
Step 2: Create an API in API Gateway
● Go to AWS API Gateway → Click Create API.
● Choose REST API → Click Build.
● Select Regional and click Create API.
● Click Actions → Create Resource.
● Enter a name (e.g., hello) and click Create Resource.
● Select the resource and click Create Method → Choose GET.
● Select Lambda Function as the integration type.
● Enter the Lambda function name created earlier.
● Click Save → Confirm by clicking OK.
Step 3: Deploy the API
● Click Actions → Deploy API.
● Create a new stage (e.g., prod).
● Click Deploy.
● Copy the Invoke URL (e.g., https://xyz.execute-api.region.amazonaws.com/prod/hello).
Step 4: Test the API
Open a browser or use cURL/Postman:
sh

Scene 41 (4m 6s)

    curl -X GET https://xyz.execute-api.region.amazonaws.com/prod/hello
● You should receive the JSON response returned by the Lambda function.
Enhancements (Optional)
● Use DynamoDB for persistent storage.
● Implement JWT authentication with Amazon Cognito.
● Enable CORS for cross-origin access.
This setup provides a scalable, serverless API without provisioning servers.
Project 7: GraphQL API with AWS AppSync – Deploy a GraphQL backend using DynamoDB.
Introduction
AWS AppSync is a fully managed service that simplifies the development of GraphQL APIs. It allows real-time data access and offline support. In this project, you will deploy a GraphQL API using AWS AppSync, with DynamoDB as the backend database. This setup provides a scalable, serverless, and efficient way to manage APIs with flexible querying and data fetching.

Scene 42 (4m 11s)

Complete Steps to Build the Project
Step 1: Set Up an AWS Account
● Sign in to your AWS account.
● Navigate to the AWS AppSync service in the AWS Management Console.
Step 2: Create an AppSync API
● Click Create API → Choose "Build from scratch" → Enter API name → Select "Create".
● Choose GraphQL as the API type.
Step 3: Define a GraphQL Schema
● Go to the Schema section and define your GraphQL types. Example schema for a To-Do app:
graphql
    type Todo
    type Query

Scene 43 (4m 15s)

    type Mutation
    schema
Step 4: Set Up a DynamoDB Table
● Navigate to AWS DynamoDB → Create Table
● Table name: Todos
● Partition key: id (String)
● Enable on-demand capacity mode
Step 5: Connect DynamoDB as a Data Source
● In AWS AppSync, go to Data Sources → Create Data Source.
● Select Amazon DynamoDB → Choose the Todos table.
● Grant necessary permissions by attaching an IAM role.
Step 6: Create Resolvers for Queries & Mutations
● In AppSync, go to Resolvers and attach the created DynamoDB data source to GraphQL queries/mutations.

Scene 44 (4m 19s)

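● Use VTL (Velocity Template Language) for mapping templates. Example createTodo resolver request template (a typical DynamoDB PutItem mapping; adjust the field names to your schema):
vtl
    {
      "version": "2017-02-28",
      "operation": "PutItem",
      "key": {
        "id": $util.dynamodb.toDynamoDBJson($util.autoId())
      },
      "attributeValues": {
        "title": $util.dynamodb.toDynamoDBJson($ctx.args.title),
        "completed": $util.dynamodb.toDynamoDBJson(false)
      }
    }
Step 7: Deploy & Test the API
● Use GraphQL Explorer in AWS AppSync to test queries and mutations. Example mutation to add a new todo:
graphql
    mutation {
      addTodo(title: "Learn AppSync") {
        id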

Scene 45 (4m 23s)

        title
        completed
      }
    }
● To fetch all todos, run the list query defined in your schema and select the id, title, and completed fields.
Step 8: Secure the API
● In Settings, configure authentication (API Key, Cognito, or IAM).
● Use AWS IAM roles for granular access control.
Step 9: Deploy a Frontend (Optional)
● Use React.js, Vue.js, or AWS Amplify to build a UI.
● Install AWS Amplify CLI and connect the frontend to AppSync.
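Outside the console, the addTodo mutation from Step 7 can also be sent to the API over HTTPS. The sketch below assumes API Key authentication (one of the options in Step 8), which AppSync reads from the `x-api-key` request header. The endpoint URL and key are placeholders you copy from the API's Settings page, and the `String!` variable type is an assumption, since the schema's argument types are not shown here.

```python
import json
from urllib.request import Request, urlopen

# Mutation from Step 7, rewritten to take the title as a variable.
ADD_TODO_MUTATION = """
mutation AddTodo($title: String!) {
  addTodo(title: $title) {
    id
    title
    completed
  }
}
"""


def build_request(api_url, api_key, query, variables):
    """Build the POST request for a GraphQL operation."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return Request(
        api_url,
        data=body,
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


def add_todo(api_url, api_key, title, opener=urlopen):
    """Run the addTodo mutation and return the created item."""
    request = build_request(api_url, api_key, ADD_TODO_MUTATION, {"title": title})
    with opener(request) as response:
        result = json.load(response)
    # GraphQL reports failures in an "errors" list, often with HTTP 200.
    if result.get("errors"):
        raise RuntimeError(result["errors"])
    return result["data"]["addTodo"]
```

Passing the title as a GraphQL variable rather than formatting it into the query string avoids quoting problems with user input. The `opener` parameter exists only so the function can be exercised without a live API.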

Scene 46 (4m 26s)

Conclusion
This project provides hands-on experience with AWS AppSync, GraphQL, and DynamoDB, enabling you to build a serverless GraphQL backend efficiently. You can extend it by adding authentication, subscriptions for real-time updates, and integrating a frontend.
Project 8: Serverless Chatbot – Build an AI-powered chatbot with AWS Lex & Lambda.
Introduction
A serverless chatbot allows users to interact using natural language. AWS Lex provides automatic speech recognition (ASR) and natural language understanding (NLU) to process user queries. AWS Lambda processes user inputs and responds dynamically. This chatbot can be integrated into applications like websites or messaging platforms.
Steps to Build a Serverless Chatbot
Step 1: Create an AWS Lex Bot
1. Go to the AWS Lex console.
2. Click Create bot → Choose Create from scratch.
3. Enter the bot name (e.g., "CustomerSupportBot").
4. Select a language (e.g., English).
5. Configure IAM permissions for Lex to access Lambda.
Step 2: Define Intents and Utterances
1. Create an Intent (e.g., "CheckOrderStatus").

Scene 47 (4m 32s)

2. Add Utterances (e.g., "Where is my order?", "Track my order").
3. Set up Slots (e.g., "OrderID" for order tracking).
4. Configure the Fulfillment to call a Lambda function.
Step 3: Create an AWS Lambda Function
1. Go to the AWS Lambda console → Click Create function.
2. Select Author from scratch → Provide a function name (e.g., "LexOrderHandler").
3. Choose Runtime as Python or Node.js.
4. Add Lex permissions to Lambda (via IAM Role).
5. Write Lambda code to handle chatbot responses and logic.
6. Deploy the function.
Step 4: Integrate Lambda with AWS Lex
1. Go to your Lex bot → Select the Intent.
2. Under Fulfillment, choose AWS Lambda Function.
3. Select the Lambda function you created.
4. Save and build the bot.
Step 5: Test the Chatbot
1. In the Lex console, click Test Bot.
2. Enter sample queries and verify responses.
3. Adjust responses and logic as needed.
Step 6: Deploy and Integrate
1. Deploy the chatbot on Amazon Connect, Facebook Messenger, Slack, or a Website.
2. Use AWS API Gateway and AWS Lambda for a REST API interface.
3. Monitor chatbot performance using AWS CloudWatch Logs.
Conclusion

Scene 48 (4m 41s)

This project demonstrates how to build a serverless AI chatbot using AWS Lex and Lambda. It provides an interactive experience for users and can be customized for various use cases like customer support, FAQs, or order tracking.
Project 9: Event-Driven Microservices – Use SNS & SQS for asynchronous microservices communication.
Introduction
Event-driven microservices architecture enables loosely coupled services to communicate asynchronously. AWS Simple Notification Service (SNS) and Simple Queue Service (SQS) help in achieving this by allowing microservices to publish and consume messages efficiently.
In this project, we will:
● Use SNS for event broadcasting.
● Use SQS for message queuing and processing.
● Set up multiple microservices to communicate asynchronously.
Complete Steps to Implement This AWS Project
Step 1: Create an SNS Topic
1. Open AWS Management Console → Navigate to SNS.
2. Click on Create Topic → Choose Standard or FIFO.
3. Give a name (e.g., order-events-topic).
4. Click Create Topic.
Step 2: Create SQS Queues

Scene 49 (4m 47s)

1. Open AWS SQS → Click Create Queue.
2. Choose Standard or FIFO queue type.
3. Give a name (e.g., order-processing-queue).
4. Enable "Subscribe to SNS topic".
5. Under Access Policy, allow SNS to send messages to SQS.
6. Click Create Queue.
Repeat for additional microservices (e.g., inventory-update-queue).
Step 3: Subscribe SQS Queues to the SNS Topic
1. Go to the SNS Topic created earlier.
2. Click Create Subscription.
3. Select SQS as the protocol.
4. Choose the SQS Queue created earlier.
5. Click Create Subscription.
Repeat this for other SQS queues.
Step 4: Deploy Producer Microservice (Publisher)
The producer microservice will publish events to the SNS topic.
Sample Python Code (Using Boto3)
python
    import boto3
    import json

    sns = boto3.client('sns', region_name='us-east-1')

Scene 50 (4m 52s)

    topic_arn = 'arn:aws:sns:us-east-1:123456789012:order-events-topic'
    # Example event payload; replace with your own order fields.
    message = {"order_id": "12345", "status": "created"}
    response = sns.publish(
        TopicArn=topic_arn,
        Message=json.dumps(message),
        Subject="New Order Event"
    )
    print("Message published:", response)
Step 5: Deploy Consumer Microservice (Subscriber)
The consumer microservice will process messages from the SQS queue.
Sample Python Code (Using Boto3)
python
    import boto3